What Is TrueZero?

Product Overview

Data is being created (and collected) at a constantly increasing pace, and much of this data is highly sensitive – personal, financial, and medical are all highly regulated categories. Moreover, as more and more granular data is created, information that might not have been considered sensitive a decade ago (user agents, IP addresses, telemetry, user behavior, etc.) can now be combined to re-identify individuals and other entities.

As your company collects, analyzes, stores, and shares the data essential to its operations, both the risk you incur and the impact of a potential security incident rise (whether the threat is malicious or accidental, external or internal).

Tokenization

Data tokenization is a term that different organizations use to mean different things, but broadly:

Tokenization is the process of replacing raw (usually sensitive) data with non-sensitive tokens that represent that data, and which (optionally) can be later "redeemed" to retrieve the original data by authorized parties.

The core advantage of storing and exchanging tokens instead of raw data is that the impact of a data breach is drastically reduced – along with other use-case-specific advantages (e.g. reduced compliance costs associated with handling cardholder data under PCI-DSS regulations).

Tokenization is in wide use across industries that depend on sensitive data, and is being increasingly applied for data minimization and general data security across all industries as data footprints continue to grow. But not all tokenization solutions are created equal...

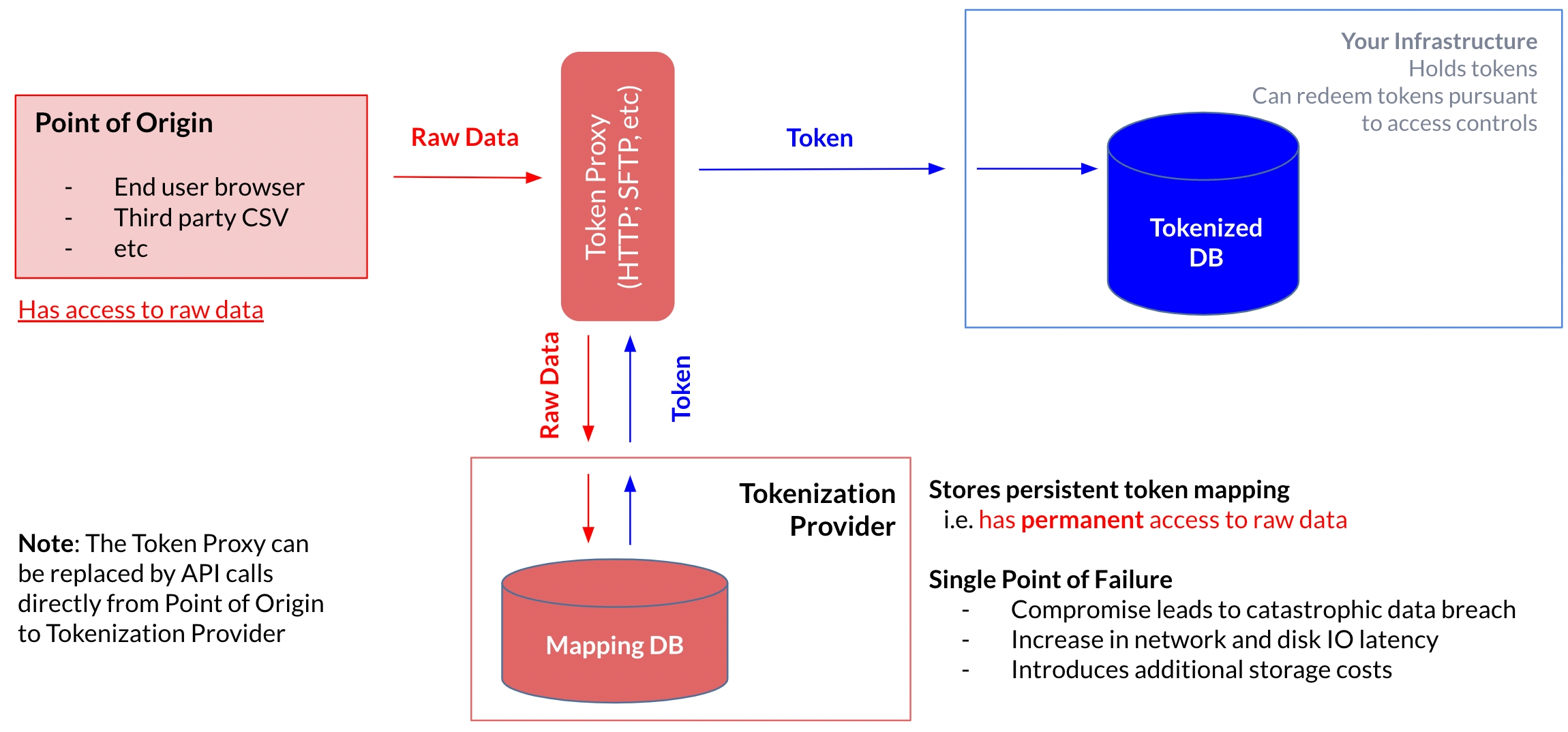

Vaulted Tokenization

The first generation of tokenization providers uses a process called "vaulted" tokenization, where the provider accepts sensitive data (either from the customer application or directly from a user) via a custom API call or through a proxy application, stores the raw data in a traditional database with a randomly generated token as its identifier, and returns that token.

This mechanism successfully offloads some risk from the customer to the provider, but it has a number of downsides:

Raw data is sent to the tokenization provider, so the customer must trust the provider not only to protect the data from exposure, but also not to leverage the data for purposes beyond storage (e.g. analytics, advertising, etc.).

Since a database is involved, disk I/O imposes latency (particularly on detokenization) and storage costs get passed along to the customer.
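The vaulted flow described above can be sketched in a few lines. This is a minimal illustration only: the `TokenVault` class, the `tok_` prefix, and the in-memory dictionary are assumptions made for the example, standing in for a provider's API and database. It is not any specific provider's implementation.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory stand-in for a vaulted tokenization provider."""

    def __init__(self) -> None:
        # A real provider would use a durable, access-controlled database.
        self._store: dict[str, str] = {}

    def tokenize(self, raw: str) -> str:
        # The token is random, so it reveals nothing about the raw value.
        token = "tok_" + secrets.token_urlsafe(16)
        # The provider now holds the raw data, keyed by the token.
        self._store[token] = raw
        return token

    def detokenize(self, token: str) -> str:
        # Redeeming a token is a lookup; unknown tokens raise KeyError.
        return self._store[token]


vault = TokenVault()
token = vault.tokenize("4111 1111 1111 1111")
assert token != "4111 1111 1111 1111"
assert vault.detokenize(token) == "4111 1111 1111 1111"
```

Both downsides are visible in the sketch: `tokenize` receives the raw value, and every token adds a stored record that `detokenize` must later look up.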

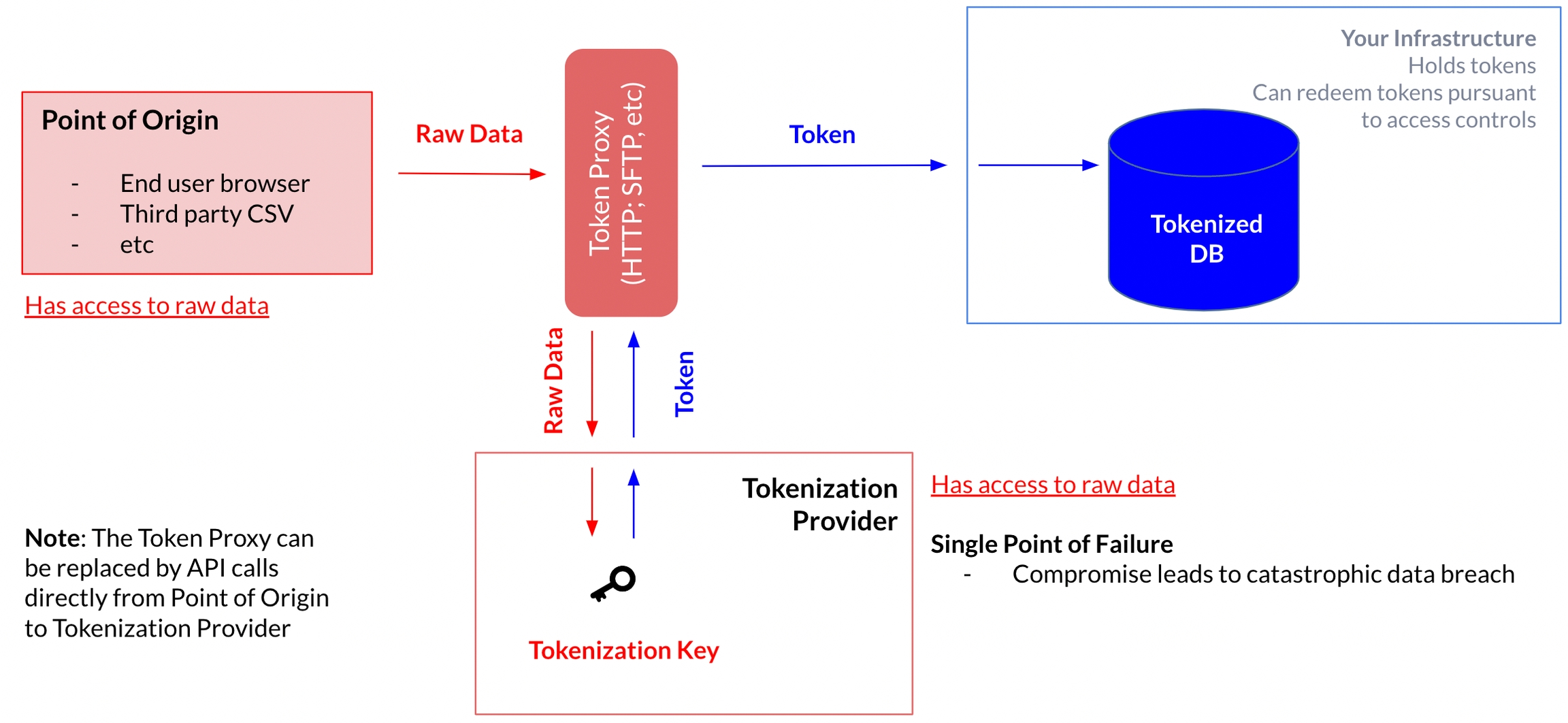

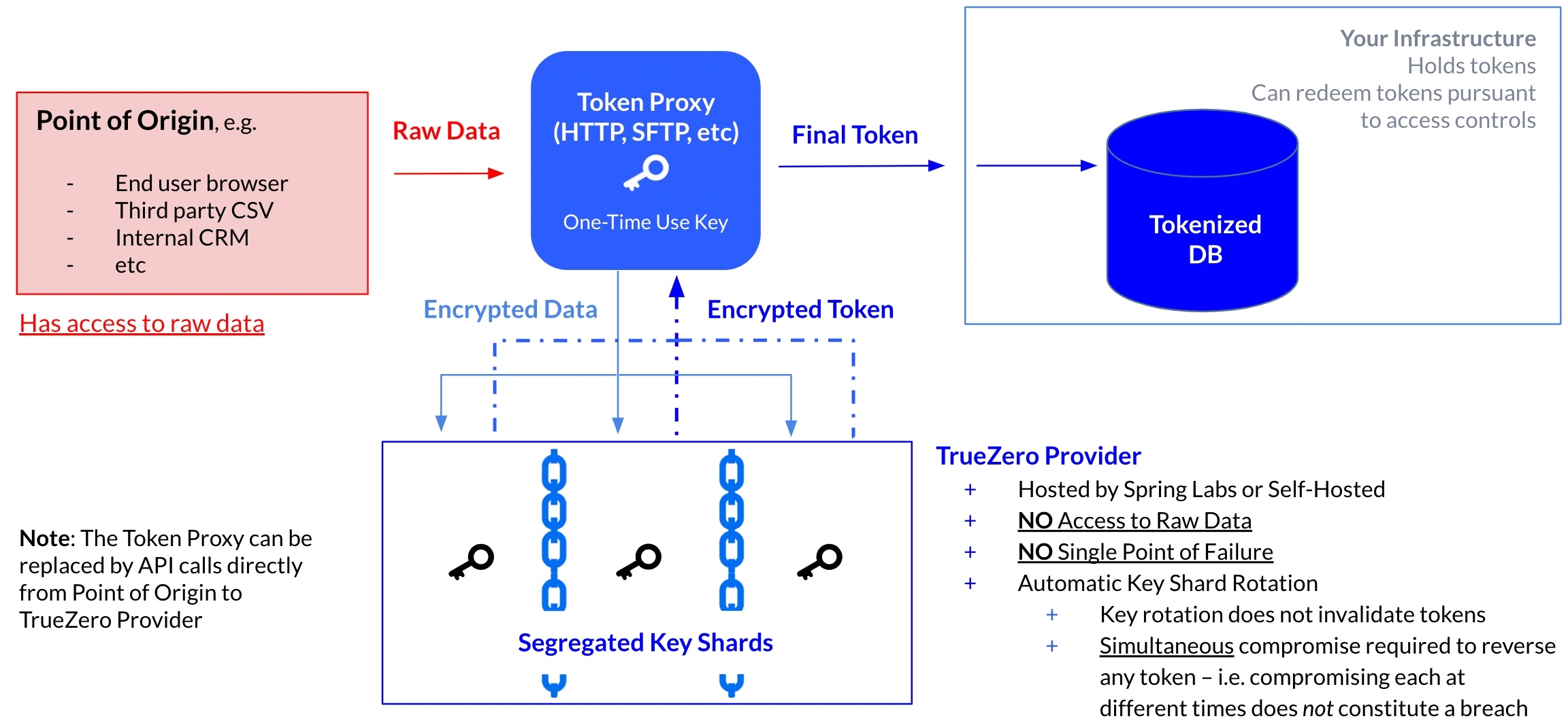

The proxy services in all of the diagrams on this page provide a no-code integration mechanism, but all of these solutions also support direct API calls from the point of origin to the tokenization provider.

Vaultless Tokenization

A newer generation of tokenization providers, attempting to improve on vaulted tokenization's shortcomings, offer "vaultless" tokenization solutions that replace the database component with pure cryptography.

In this approach, instead of generating a random token, the provider simply encrypts the raw data with a secret key and uses the resulting ciphertext as the token. While this eliminates the storage costs and latency, it fails to address the biggest concern: the raw data is still sent to the tokenization provider.

TrueZero

The TrueZero solution was designed to solve this same problem without the tradeoffs previous generations of tokenization accepted, specifically:

The TrueZero token provider, whether run in our infrastructure or yours, never has access to the raw data.

Compromise of any single system does not allow a malicious actor to detokenize any token.

Key rotation does not require invalidation of existing tokens and can be automated at any cadence.

TrueZero operates faster, and on less expensive infrastructure, than any competitor.

For lower-level details on the system design and novel cryptographic techniques we used to achieve these unique properties, see How It Works and Automatic Key Rotation – otherwise check out Quick Start to see how easy TrueZero is to integrate.
